
함께하는 데이터 분석

[Neural Networks and Deep Learning] Week 2

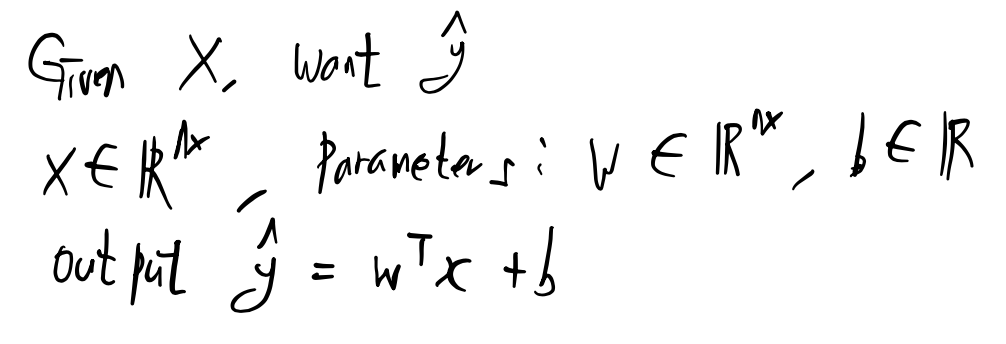

Linear Regression
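Logistic regression starts from the linear model. Below is a minimal sketch of the linear-regression prediction ŷ = wᵀx + b. The weights, bias and inputs are made-up values, and `linear_predict` is a hypothetical helper name. The sketch shows why this model alone suits binary classification poorly: ŷ is an unbounded real number. It can exceed 1 or go negative, so it cannot be read as P(y = 1 | x).

```python
import numpy as np

def linear_predict(w, b, x):
    """Linear-regression prediction y_hat = w^T x + b.

    w: weights of shape (n_x, 1); x: one example of shape (n_x, 1);
    b: scalar bias. Returns a Python float.
    """
    return float(np.dot(w.T, x)[0, 0] + b)

# Hypothetical weights and inputs, chosen only for illustration.
w = np.array([[2.0], [-1.0]])
b = 0.5
x_big = np.array([[3.0], [0.0]])
x_neg = np.array([[-2.0], [1.0]])

print(linear_predict(w, b, x_big))  # 6.5, larger than 1
print(linear_predict(w, b, x_neg))  # -4.5, negative
```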

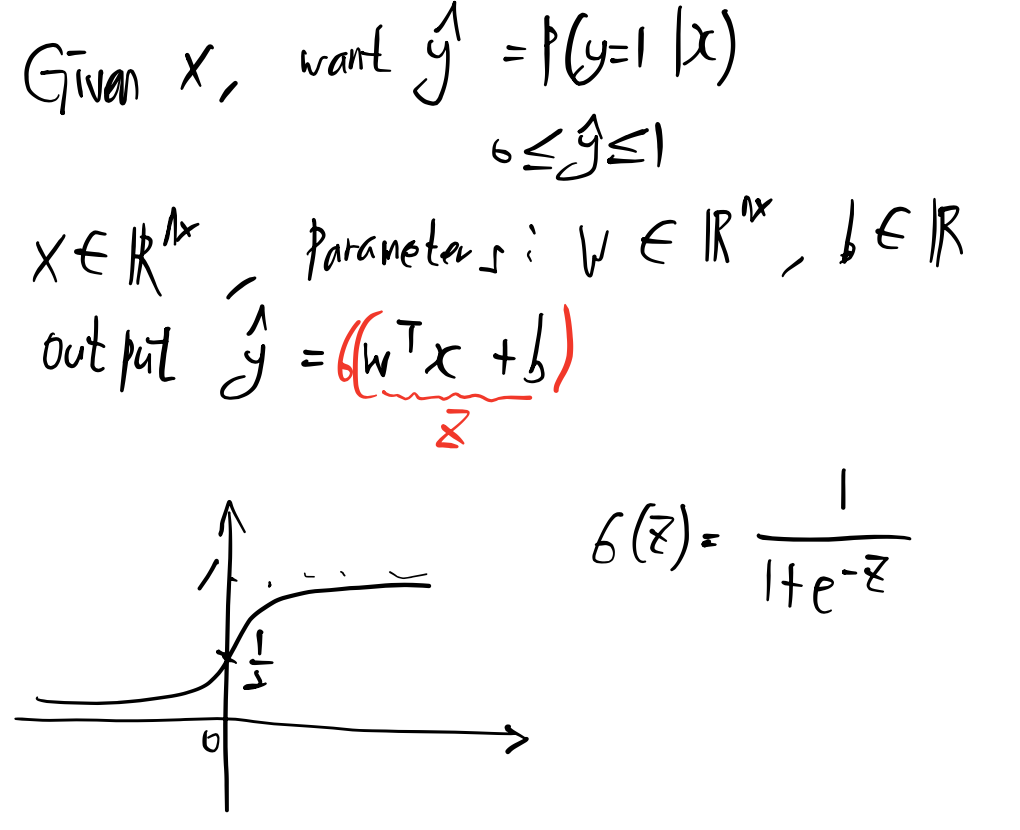

Logistic Regression
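Logistic regression passes the linear score through the sigmoid, ŷ = σ(wᵀx + b) with σ(z) = 1 / (1 + e^(−z)). This squashes any real number into (0, 1). Below is a minimal sketch with made-up weights. The helper names `sigmoid` and `logistic_predict` are illustrative.

```python
import numpy as np

def sigmoid(z):
    """Logistic function sigma(z) = 1 / (1 + e^(-z)); maps any real z into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def logistic_predict(w, b, x):
    """y_hat = sigma(w^T x + b), read as an estimate of P(y = 1 | x)."""
    z = np.dot(w.T, x) + b      # shape (1, 1)
    return sigmoid(z)

w = np.array([[2.0], [-1.0]])   # hypothetical weights
b = 0.5
x = np.array([[3.0], [0.0]])

print(sigmoid(0.0))               # 0.5
print(logistic_predict(w, b, x))  # close to 1, since z = 6.5
```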

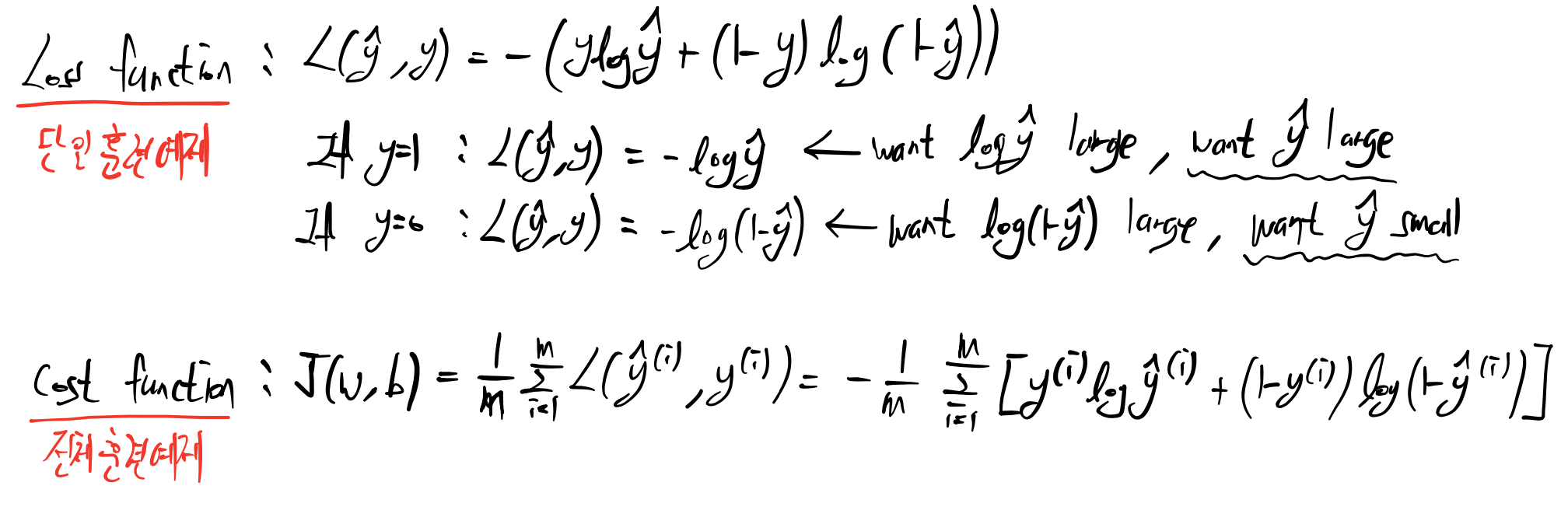

Logistic Regression cost function
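The loss for one example is L(ŷ, y) = −(y log ŷ + (1 − y) log(1 − ŷ)). The cost J(w, b) is its average over the m training examples. Below is a short sketch with illustrative function names and sample values. It shows that the loss is small when the prediction is confidently right and large when it is confidently wrong.

```python
import numpy as np

def loss(y_hat, y):
    """Cross-entropy loss for one example: -(y log y_hat + (1 - y) log(1 - y_hat))."""
    return -(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat))

def cost(y_hat, y):
    """Cost J: the average of the per-example losses over the m examples."""
    y_hat = np.asarray(y_hat, dtype=float)
    y = np.asarray(y, dtype=float)
    return float(np.mean(loss(y_hat, y)))

print(loss(0.9, 1))  # about 0.105: confident and correct
print(loss(0.1, 1))  # about 2.303: confident and wrong
print(cost([0.9, 0.2, 0.7], [1, 0, 1]))
```

ŷ must stay strictly between 0 and 1 here; at exactly 0 or 1 the logarithm diverges.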

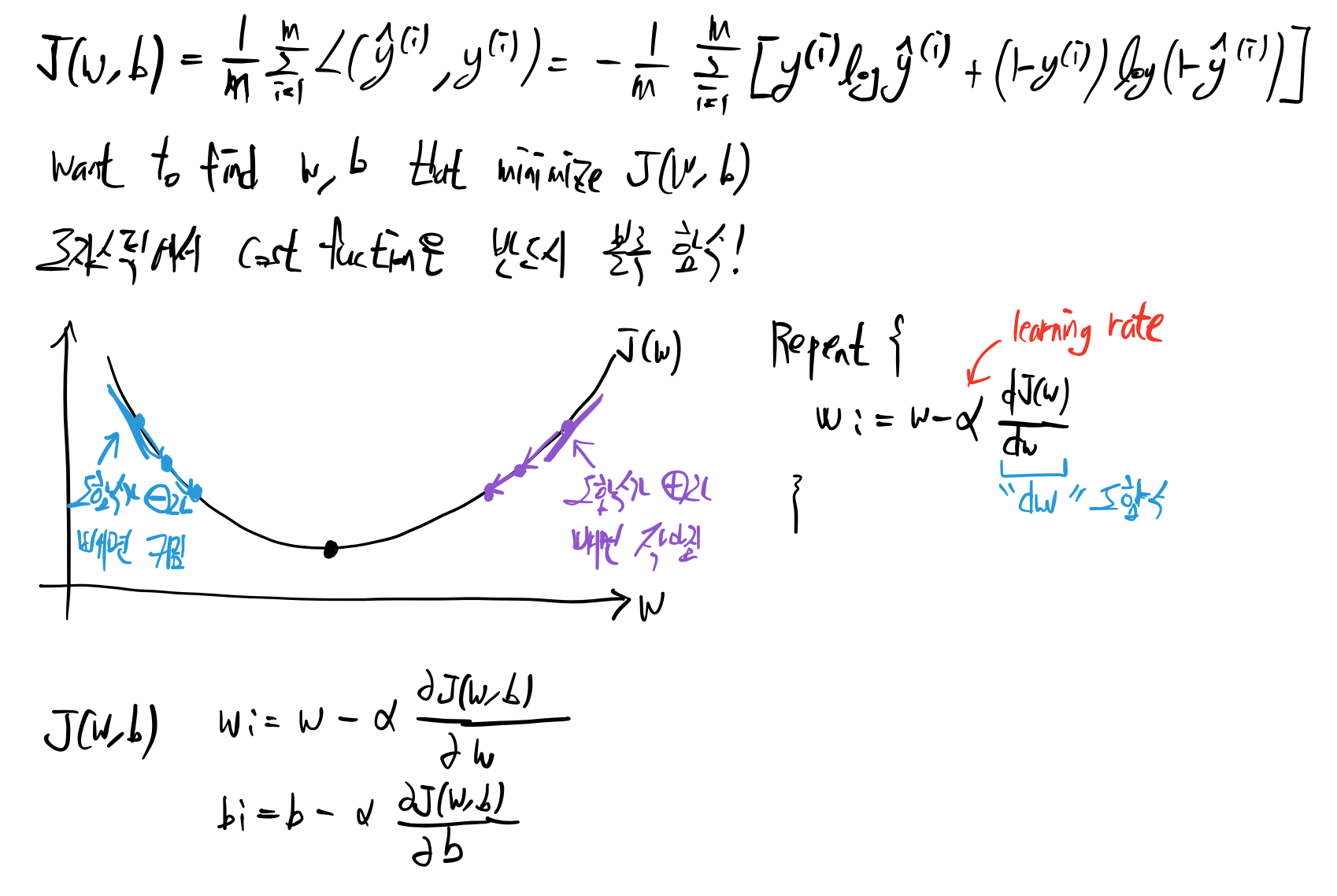

Gradient Descent
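Gradient descent repeatedly steps against the gradient: w := w − α ∂J/∂w and b := b − α ∂J/∂b, where α is the learning rate. The sketch below applies that update to a hypothetical toy cost J(w, b) = (w − 3)² + (b + 1)². That cost was chosen only because its minimum (w = 3, b = −1) is known in advance. The learning rate and iteration count are arbitrary choices.

```python
def gradient_descent(grad_fn, w, b, learning_rate=0.1, num_iters=100):
    """Repeat w := w - alpha * dJ/dw and b := b - alpha * dJ/db.

    grad_fn(w, b) must return the pair (dJ/dw, dJ/db).
    """
    for _ in range(num_iters):
        dw, db = grad_fn(w, b)
        w = w - learning_rate * dw
        b = b - learning_rate * db
    return w, b

# Hypothetical toy cost J(w, b) = (w - 3)^2 + (b + 1)^2, minimized at w = 3, b = -1.
def toy_grad(w, b):
    return 2 * (w - 3), 2 * (b + 1)

w, b = gradient_descent(toy_grad, w=0.0, b=0.0)
print(w, b)  # approaches 3.0 and -1.0
```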

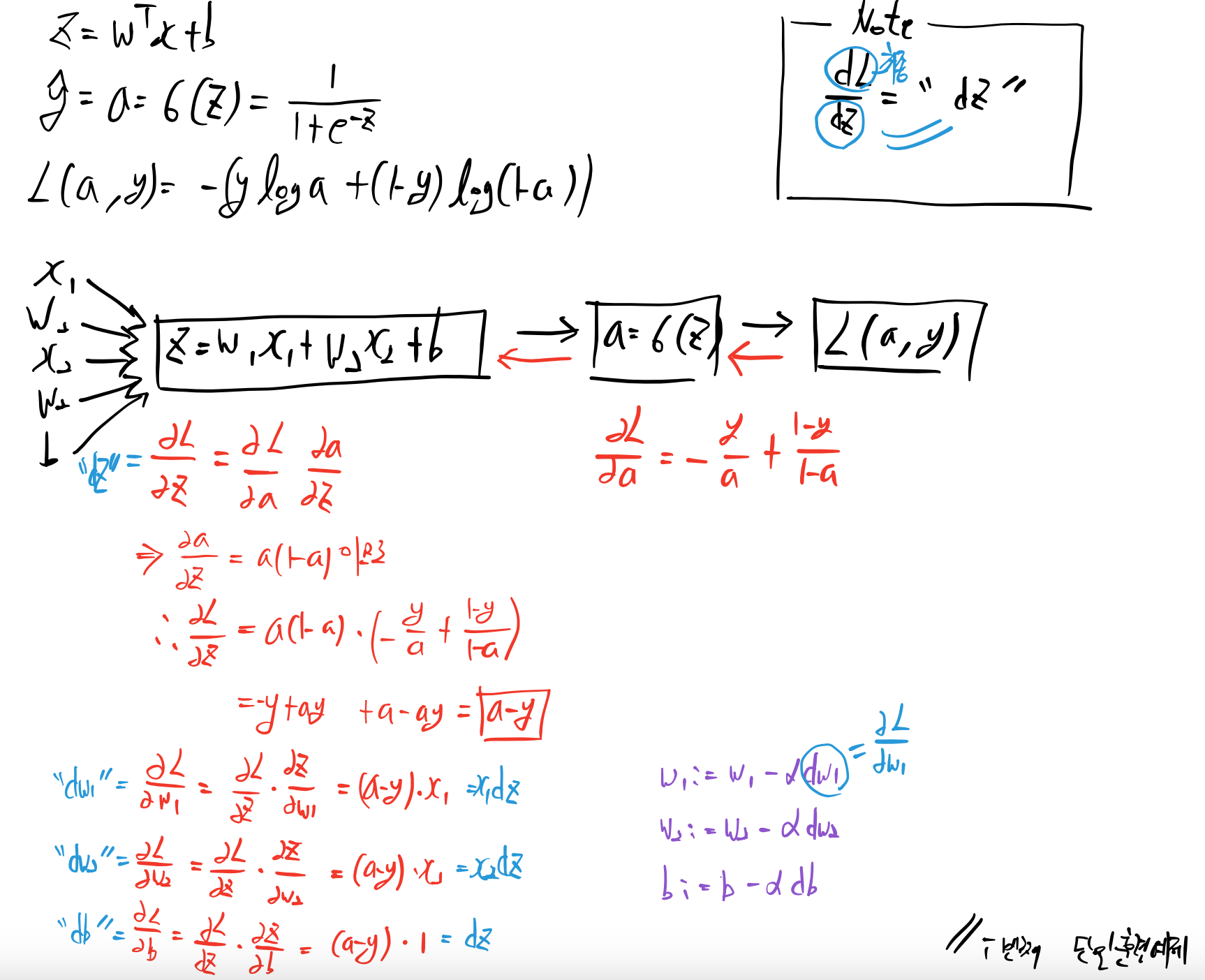

Logistic Regression Gradient Descent
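For a single example the computation graph is z = wᵀx + b → a = σ(z) → L(a, y). The chain rule collapses to dz = a − y, dw = x·dz and db = dz. Below is a minimal sketch that computes these gradients and checks them against central finite differences. The values and helper names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward_loss(w, b, x, y):
    """Loss L(a, y) for one example via z = w^T x + b, a = sigma(z)."""
    a = sigmoid(np.dot(w.T, x)[0, 0] + b)
    return -(y * np.log(a) + (1 - y) * np.log(1 - a))

def backward(w, b, x, y):
    """Backward pass for one example: dz = a - y, dw = x * dz, db = dz."""
    a = sigmoid(np.dot(w.T, x)[0, 0] + b)
    dz = a - y      # dL/da * da/dz simplifies to a - y
    dw = x * dz     # shape (n_x, 1), same as w
    db = dz
    return dw, db

# Hypothetical single example with n_x = 2 features (column vectors).
w = np.array([[0.3], [-0.2]])
b = 0.1
x = np.array([[1.5], [2.0]])
y = 1

dw, db = backward(w, b, x, y)

# Check dL/dw1 and dL/db against central finite differences.
eps = 1e-6
e1 = np.array([[eps], [0.0]])
num_dw1 = (forward_loss(w + e1, b, x, y) - forward_loss(w - e1, b, x, y)) / (2 * eps)
num_db = (forward_loss(w, b + eps, x, y) - forward_loss(w, b - eps, x, y)) / (2 * eps)
print(dw[0, 0], num_dw1)  # the two numbers should agree to several decimals
print(db, num_db)
```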

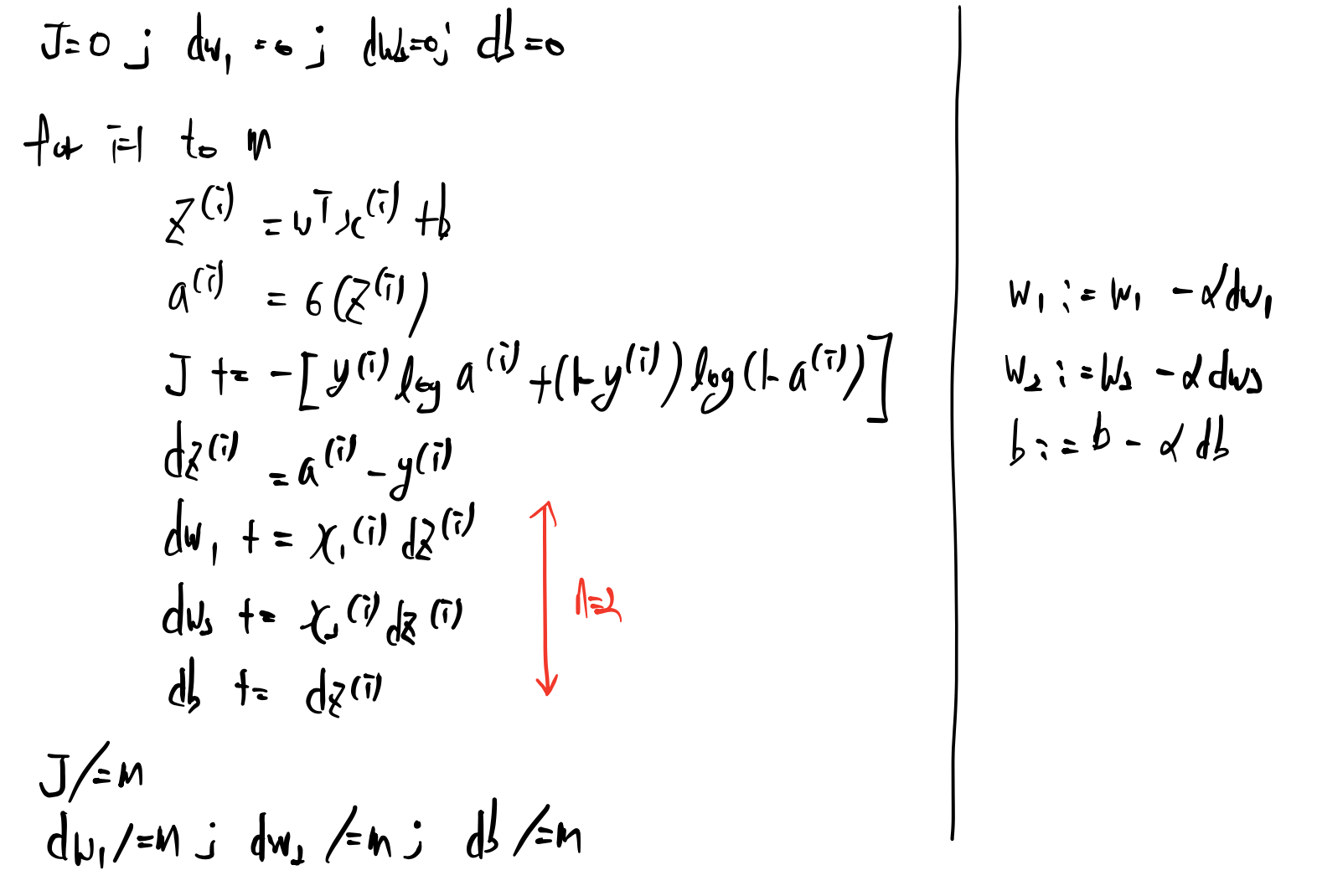

Logistic Regression Gradient Descent on m examples
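Over m examples, J and the gradients are averages of the per-example values. A straightforward implementation therefore accumulates them in a loop over examples, plus another loop over features, and divides by m before updating w and b. Below is a sketch of one such step with explicit loops. The data and the name `one_step_loop` are hypothetical. These two nested loops are what vectorization removes.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def one_step_loop(X, Y, w, b, learning_rate=0.1):
    """One gradient-descent step of logistic regression with explicit loops.

    X: shape (n_x, m), one example per column; Y: shape (1, m) of 0/1 labels;
    w: shape (n_x, 1); b: scalar. Returns (w, b, J), J being the cost before the update.
    """
    n_x, m = X.shape
    J = 0.0
    dw = np.zeros((n_x, 1))
    db = 0.0
    for i in range(m):                 # loop over the m examples
        z = b
        for j in range(n_x):           # loop over the n_x features
            z += w[j, 0] * X[j, i]
        a = sigmoid(z)
        y = Y[0, i]
        J += -(y * np.log(a) + (1 - y) * np.log(1 - a))
        dz = a - y
        for j in range(n_x):
            dw[j, 0] += X[j, i] * dz
        db += dz
    J /= m
    dw /= m
    db /= m
    w = w - learning_rate * dw
    b = b - learning_rate * db
    return w, b, J

# Hypothetical toy data: 2 features, 4 examples.
X = np.array([[0.5, 1.5, -1.0, -2.0],
              [1.0, 2.0, -0.5, -1.5]])
Y = np.array([[1, 1, 0, 0]])
w = np.zeros((2, 1))
b = 0.0
for step in range(3):
    w, b, J = one_step_loop(X, Y, w, b)
    print(step, J)  # J is the cost before each update; it should shrink
```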

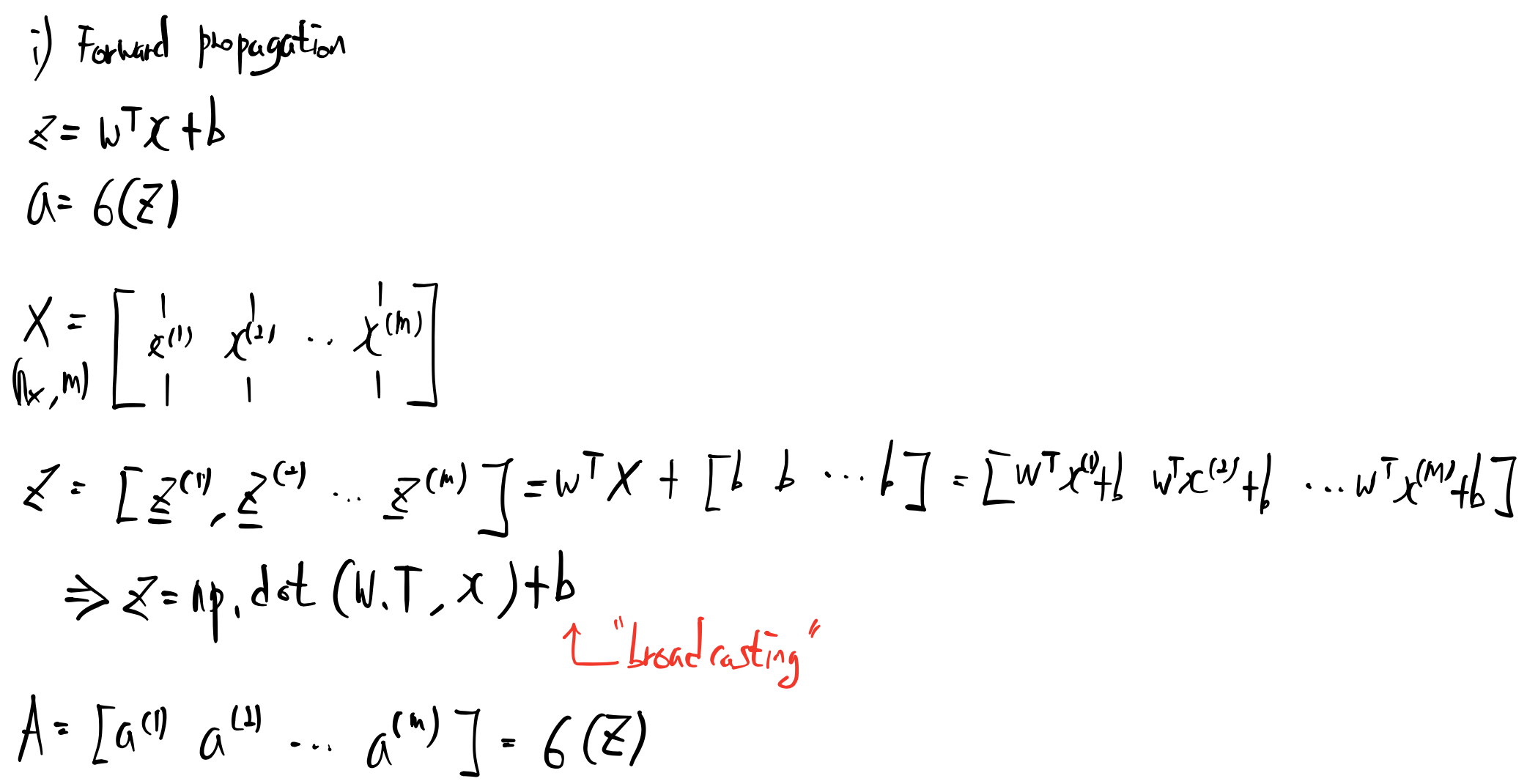

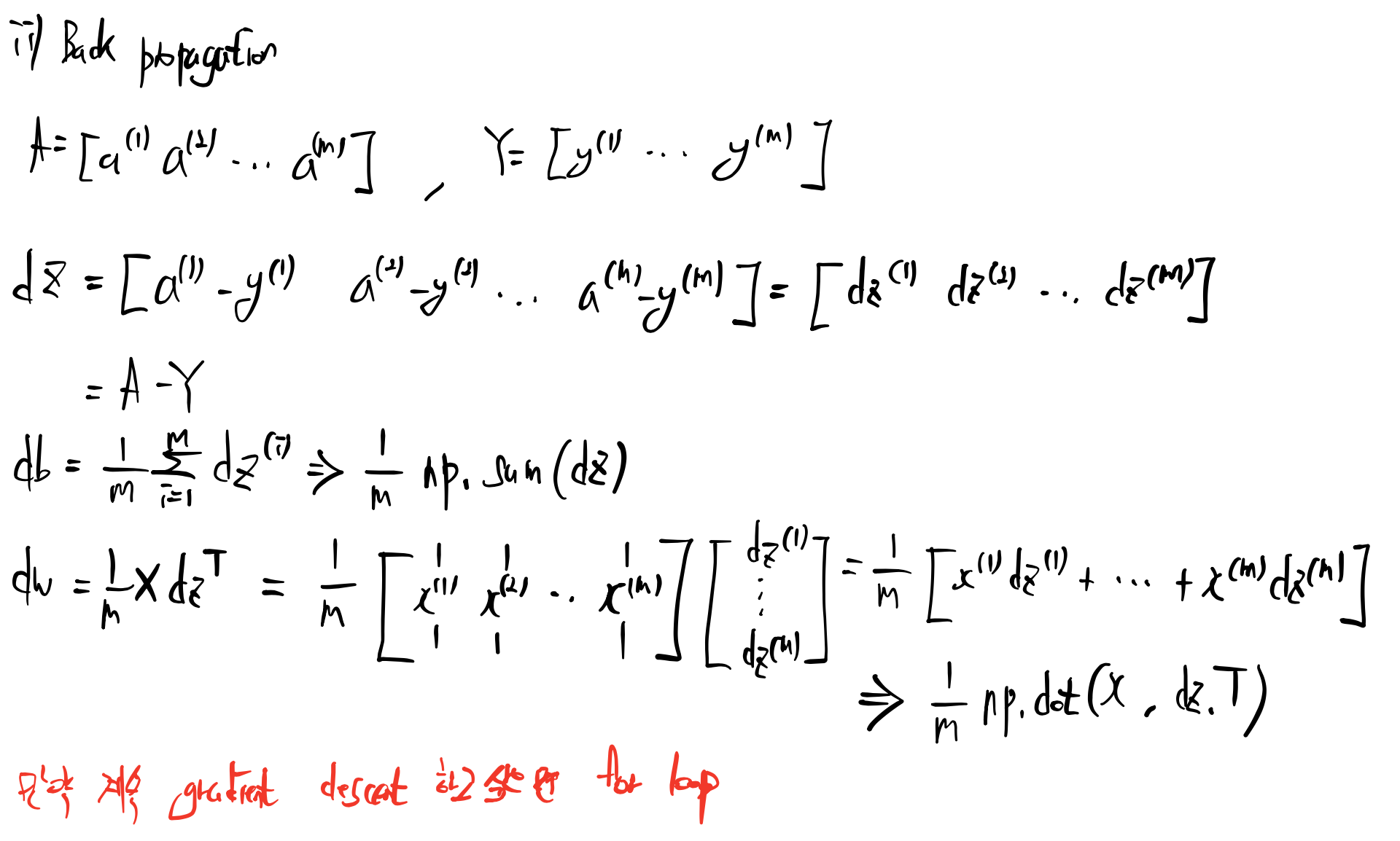

Vectorization

```python
import numpy as np
import time

# Two random vectors with one million elements each
a = np.random.rand(1000000)
b = np.random.rand(1000000)

# Dot product with an explicit for loop
tic = time.time()
c = 0
for i in range(1000000):
    c += a[i] * b[i]
toc = time.time()
print('for loop :' + str(1000 * (toc - tic)) + 'ms')
print(c)

# Same dot product, vectorized with np.dot
tic = time.time()
c = np.dot(a, b)
toc = time.time()
print('vectorization :' + str(1000 * (toc - tic)) + 'ms')
print(c)
```

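```
for loop :339.0049934387207ms
249929.39667635385
vectorization :1.4219284057617188ms
249929.39667634486
```

The vectorized `np.dot` is roughly 240 times faster here (about 339 ms vs. 1.4 ms). The two sums differ only in the last few digits because the floating-point additions are performed in a different order.

Python Broadcasting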

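```python
import numpy as np

A = np.array([[56.0, 0.0, 4.4, 68.0],
              [1.2, 104.0, 52.0, 8.0],
              [1.8, 135.0, 99.0, 0.9]])

summ = A.sum(axis=0)       # column sums, shape (4,)
percent = 100 * A / summ   # (3, 4) / (4,): summ is broadcast across the 3 rows
print(percent)

B = np.array([[1],
              [2],
              [3],
              [4]])
print(B + 100)             # the scalar 100 is broadcast to shape (4, 1)

C = np.array([[1, 2, 3],
              [4, 5, 6]])
D = np.array([[100, 200, 300]])
print(C + D)               # (2, 3) + (1, 3): D is copied down the 2 rows

E = np.array([[100],
              [200]])
print(C + E)               # (2, 3) + (2, 1): E is copied across the 3 columns
```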

```
[[94.91525424  0.          2.83140283 88.42652796]
 [ 2.03389831 43.51464435 33.46203346 10.40312094]
 [ 3.05084746 56.48535565 63.70656371  1.17035111]]
[[101]
 [102]
 [103]
 [104]]
[[101 202 303]
 [104 205 306]]
[[101 102 103]
 [204 205 206]]
```

Python-NumPy vectors
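The comment in the code below recommends always fixing whether a vector is a row or a column. The reason is NumPy's "rank 1 array": `np.random.randn(3)` has shape `(3,)`, which is neither a row nor a column, and transposing it does nothing. Below is a minimal sketch of the pitfall and the usual fix, which is explicit `(n, 1)` shapes plus an `assert`. The values are random.

```python
import numpy as np

# A "rank 1 array": shape (3,), neither a row nor a column vector.
a = np.random.randn(3)
print(a.shape)               # (3,)
print(a.T.shape)             # (3,): transposing does nothing
print(np.dot(a, a.T).shape)  # (): a scalar, not an outer product

# Explicit column vector: shape (3, 1).
b = np.random.randn(3, 1)
print(np.dot(b, b.T).shape)  # (3, 3): outer product
print(np.dot(b.T, b).shape)  # (1, 1): inner product

# Cheap guard against shape bugs.
assert b.shape == (3, 1)
```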

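```python
import numpy as np

# Always state explicitly whether a vector is a row (1, n) or a column (n, 1)
a = np.random.randn(3).reshape(1, 3)
print(np.dot(a, a.T))  # (1, 3) x (3, 1) -> (1, 1) inner product
print(np.dot(a.T, a))  # (3, 1) x (1, 3) -> (3, 3) outer product
```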

```
[[1.45552316]]
[[ 0.82748124  0.70883268 -0.131336  ]
 [ 0.70883268  0.60719656 -0.11250436]
 [-0.131336   -0.11250436  0.02084536]]
```

Vectorizing Logistic Regression
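Below is a minimal sketch of vectorized logistic regression. It assumes the examples are stacked as columns of X (shape (n_x, m)) and the labels are in Y (shape (1, m)).

- Forward pass: Z = wᵀX + b, then A = σ(Z).
- Backward pass: dZ = A − Y, dw = (1/m) X dZᵀ, db = (1/m) Σ dZ.

The only loop left is over gradient-descent iterations. The toy data and the function names `propagate` and `train` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def propagate(w, b, X, Y):
    """Vectorized forward and backward pass over all m examples at once.

    X: (n_x, m), Y: (1, m), w: (n_x, 1), b: scalar.
    """
    m = X.shape[1]
    Z = np.dot(w.T, X) + b      # (1, m); b is broadcast across the row
    A = sigmoid(Z)              # (1, m)
    J = -np.mean(Y * np.log(A) + (1 - Y) * np.log(1 - A))
    dZ = A - Y                  # (1, m)
    dw = np.dot(X, dZ.T) / m    # (n_x, 1)
    db = np.sum(dZ) / m         # scalar
    return dw, db, J

def train(w, b, X, Y, learning_rate=0.1, num_iters=1000):
    """Gradient descent; the only remaining loop is over iterations."""
    for _ in range(num_iters):
        dw, db, _ = propagate(w, b, X, Y)
        w = w - learning_rate * dw
        b = b - learning_rate * db
    return w, b

# Hypothetical toy data: 2 features, 4 examples (one per column).
X = np.array([[0.5, 1.5, -1.0, -2.0],
              [1.0, 2.0, -0.5, -1.5]])
Y = np.array([[1, 1, 0, 0]])
w, b = train(np.zeros((2, 1)), 0.0, X, Y)
predictions = (sigmoid(np.dot(w.T, X) + b) > 0.5).astype(int)
print(predictions)  # matches Y on this linearly separable toy set
```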
